Editor’s note: Innovation Thursday – a deep dive into a newly emerging technology or companies such as our Startup Spotlight – is a regular feature at WRAL TechWire.

+++

CHAPEL HILL – As the conversation around artificial intelligence gains momentum, experts continue to tout the potential benefits of AI. However, organizations and employers may wonder how best to implement this nascent technology at their workplace. In particular, what sort of framework should organizations and employers use when considering this new technology?

The Kenan Institute of Private Enterprise posed these questions to two individuals with extensive and distinct experience in the world of artificial intelligence. Professor Mohammad Jarrahi of the UNC School of Information and Library Science researches the implications of AI for work, as well as the implications of new information and communications technology more broadly. Phaedra Boinodiris is the business transformation leader for IBM’s Responsible AI consulting group and serves on the leadership team of IBM’s Academy of Technology.

We asked Jarrahi and Boinodiris about potential concerns and issues surrounding the adoption of AI. Boinodiris’ answers are informed by excerpts from her recent book “AI For The Rest of Us,” co-written with Beth Rudden and released in May.

Large language models such as ChatGPT have led to an explosion of AI-related discussion, especially over the past few months. What is the current level of AI adoption across firms, and how do you see this changing over the next several years?

Mohammad Jarrahi: OpenAI’s ChatGPT has stirred up the conversation surrounding AI, transforming it from a background technology of concern primarily to tech enthusiasts and business magnates into a topic that engages the public’s imagination. The resurgence of interest in AI, largely spurred by ChatGPT, is evident. A recent survey by Accenture indicates that 63% of organizations are prioritizing AI above all other digital technologies.[1]

Big tech companies such as Amazon, Facebook and Google have already been investing billions in various AI systems. However, I anticipate that investments in AI technologies will extend to all business types in the future. As the competition in AI intensifies, I also predict that AI, often hidden in the background or “under the surface” (as phrased by Jeff Bezos)[2], will emerge from the shadows. It will become a conspicuous strategy in product delivery and marketing.

As firms adopt AI tools, how can they include their employees in the conversation around integration of the new technology, ethical considerations and potential job-related changes?

Jarrahi: Adopting strategies like the “human-centered AI” mindset can facilitate the inclusion of diverse stakeholder perspectives in an organization’s AI implementation process. Employees and other stakeholders should be actively involved in strategic decision-making concerning AI. In this strategic orientation, organizations need to emphasize that AI’s purpose is not to replace but to enhance employees’ work. Employees can help organizations determine what I have called the optimal symbiosis between humans and AI within various organizational processes[3], and how to restructure systems ethically and effectively. Years of research on IT implementation underscore that myopic approaches, overly concerned with efficiency and often leading to automation and workforce displacement, can precipitate long-term organizational problems. These may result in workforce demoralization or even unethical or illegal practices.

Phaedra Boinodiris: Positive reinforcement is one of the most powerful tools we have. We can use AI to help us find better ways to positively reinforce human beings and incentivize how we want humans to behave. Here are some examples to positively reinforce behaviors for responsible curation of AI:

- Ensure that leaders consistently communicate the importance of curating AI responsibly and provide clear guidance on how to do so. Leaders can recognize and praise teams that actively follow ethical practices.

- Clearly define and communicate who is accountable for addressing and mitigating any disparate impact caused by AI models. Reward individuals who take responsibility and actively work toward minimizing bias and promoting fairness.

- Encourage AI practitioners to actively involve diverse and inclusive teams in discussing potentially disparate impacts before completing risk assessment forms. Recognize and appreciate the efforts of those who foster collaboration and seek diverse perspectives.

- Establish an AI ethics leader and empower them with sufficient authority to make critical decisions, including the ability to shut down projects that pose ethical concerns. Recognize and support their role in promoting responsible AI practices.

Algorithms and AI systems have been shown to reinforce biases and structural inequities, especially when they’re trained on data that reflects these inequities. Phaedra, you mention in one of your videos that culture and audits can be a way to address the bias in AI systems; how can firms implement these approaches in practice?

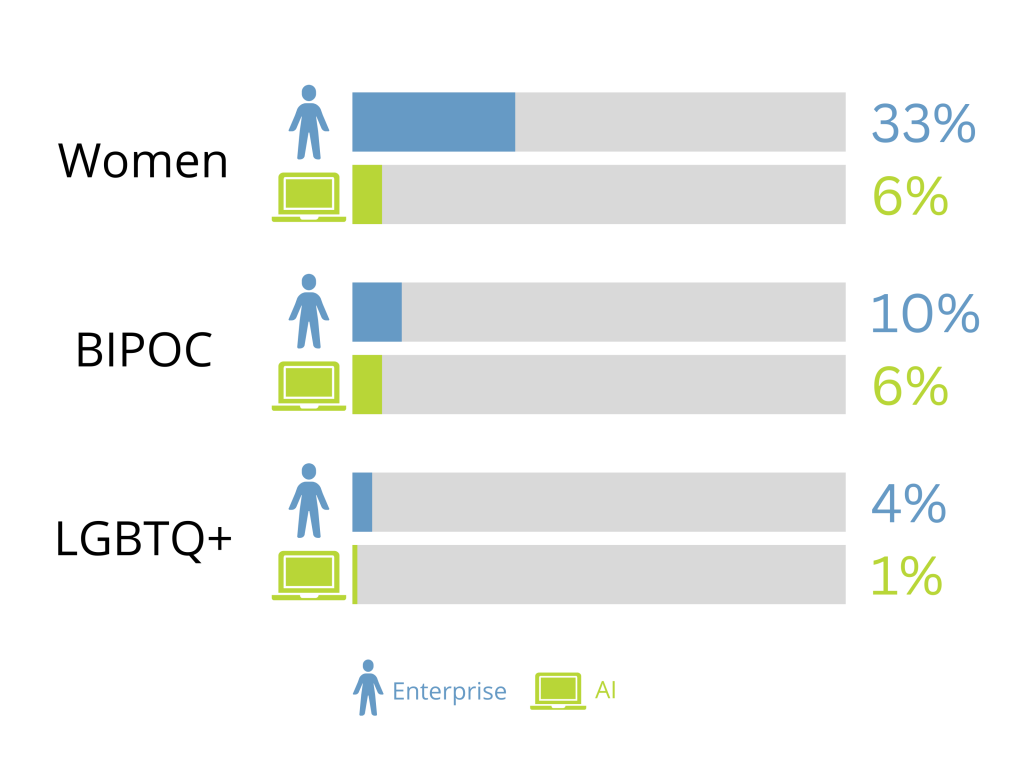

Boinodiris: An April research study from IBM’s Institute for Business Value published statistics that demonstrate this diversity dichotomy within organizations’ AI teams. Women, who represent 33% of an overall organization, are only 6% of AI teams; Black or indigenous people of color, who may represent 10% of an overall workforce, are only 6% of AI teams; and, finally, LGBTQ+ team members, who represent 4% of a workforce, are only 1% of AI teams. When only one homogeneous group chooses which data is used to train AI models that directly affect our lives, that group’s bias gets calcified into systems – and you can rest assured that systemic inequities will endure.

Practitioners who develop AI and who work on AI governance must be diverse and inclusive to mitigate the risk of harm. Moreover, these practitioners must be responsible when handling data to make sure it’s properly representative of the underlying population, gathered with consent from ethical sources, and that it’s the correct data to make the prediction in question. But we also know that humans typically do what you do and not what you say, so how do you model the culture that you require to curate AI responsibly? We believe that organizations need to prioritize three key ingredients as part of their culture:

1. Maintaining an open, growth-oriented mindset with a heavy dose of humility. Organizations need to acknowledge that there is just as much to unlearn as there is to learn. Organizations must be open enough to look into a mirror to examine the biases that inherently exist, scrutinizing which skills and competencies are valued over others and the reasons for doing so. Are voices given unequal access to megaphones? What does it mean in that organization to be considered “technical”? What factors are in place to encourage pushback (against AI model outputs, against the predominant paradigm of “fail fast,” etc.)? Prejudice is an emotional commitment to ignorance.

2. Prioritizing diversity and inclusivity. Organizations need to prioritize making sure that teams curating the data being used to train AI models are diverse and inclusive and that they are being treated fairly and equitably. As organizations look at the makeup of their teams of data scientists, they need to ask themselves: How many women are on this team? How many minorities are on this team? How many worldviews are on this team? Are the teams that are doing the work representing the most varied slices of humanity that are available? These can be domain experts who can make sure that the widest range of worldviews and experiences is represented.

3. Aiming for multidisciplinary approaches. Organizations need to prioritize doing everything they can to consistently message the necessity that teams be multidisciplinary in nature and offer regular opportunities for them to train together too. In prior chapters, we underscored the important role of social scientists. How is their value communicated on development teams?

How can a user of an AI system – a worker at a firm, for example – ensure that they aren’t overly reliant on artificial rather than human intelligence? Additionally, how can a manager ensure that their employees aren’t leaning too heavily on AI systems to generate their work output?

Jarrahi: Automation bias is a critical concern as AI integration accelerates, leading workers and organizations to rely too much on the system. Addressing this issue requires what I term “AI literacy” at both individual and organizational levels. AI literacy encompasses not only data-driven analytical skills but also a comprehensive understanding of machine capabilities and limitations (identifying areas where human intervention is crucial). Therefore, a vital component of AI literacy involves distinguishing tasks suitable for AI from those that require human intervention. What is more, implementing AI systems mandates a process redesign, bearing in mind a “human-in-the-loop” approach. This approach significantly incorporates AI capabilities – not to replace but to augment the workforce.

Are there specific areas or tasks within a firm that should not be outsourced to AI systems, and should remain under the purview of human judgment?

Jarrahi: In AI adoption, we are likely to witness a blend of automation and augmentation. AI systems have the capacity to automate mundane and repetitive tasks formerly performed by humans. However, there remain critical areas that necessitate human involvement. For instance, humans must participate directly in high-stakes decision-making to understand the ethical and organizational implications of decisions, even if machines can make nearly optimal decisions based on past data.

AI is still task-centered, focusing on specific domains. Organizational decisions often require a holistic perspective, incorporating insights from multiple tasks (e.g., understanding stakeholders’ potential reactions or how a particular decision may further or impede an organization’s long-term strategy). In this context, the human role is crucial to contextualize AI’s task-specific decision-making. For example, in my own research I found that algorithmic inference can inform the diagnostic work of pathologists, but they still need to consider factors such as patients’ medical history, lifestyle and overall health to arrive at an informed and comprehensive diagnosis.[4]

Moreover, we are increasingly encountering what researchers term “agency laundering,” where organizations misuse the opacity of AI-driven decisions to dodge responsibility. These are the areas where human judgment is essential to ensure accountability and transparency.

Boinodiris: The purpose of AI is to augment human intelligence. The hard work must be put in to ensure that an AI model actually does this well: that it performs better than a human, that it was trained with the right representative data that has been validated by domain experts, that it does not exacerbate inequities and instead empowers people. And, when applicable, the model should earn people’s trust by providing data lineage and provenance for its outputs along with rigorous audits. If an AI model does not do these things for your firm or if a model does not offer test/retest reliability, you are taking on risk.

(C) Kenan Institute

This story was originally published at https://kenaninstitute.unc.edu/commentary/ai-in-practice-the-view-from-academia-and-industry/

SOURCES

[1] Wiggers, K. (2023, March 20). Corporate investment in AI is on the rise, driven by the tech’s promise. TechCrunch. https://techcrunch.com/2023/03/20/corporate-investment-artificial-intelligence/

[2] Lauder, E. (2017, April 19). Amazon’s CEO Jeff Bezos Explains Their Approach to AI. AI Business. https://aibusiness.com/companies/amazon-s-ceo-jeff-bezos-explains-their-approach-to-ai

[3] Jarrahi, M.H. (2018). Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Business Horizons, 61(4), 577-586. https://doi.org/10.1016/j.bushor.2018.03.007

[4] Jarrahi, M.H., Davoudi, V., Haeri, M. (2022). The key to an effective AI-powered digital pathology: Establishing a symbiotic workflow between pathologists and machine. Journal of Pathology Informatics, 13. https://doi.org/10.1016/j.jpi.2022.100156